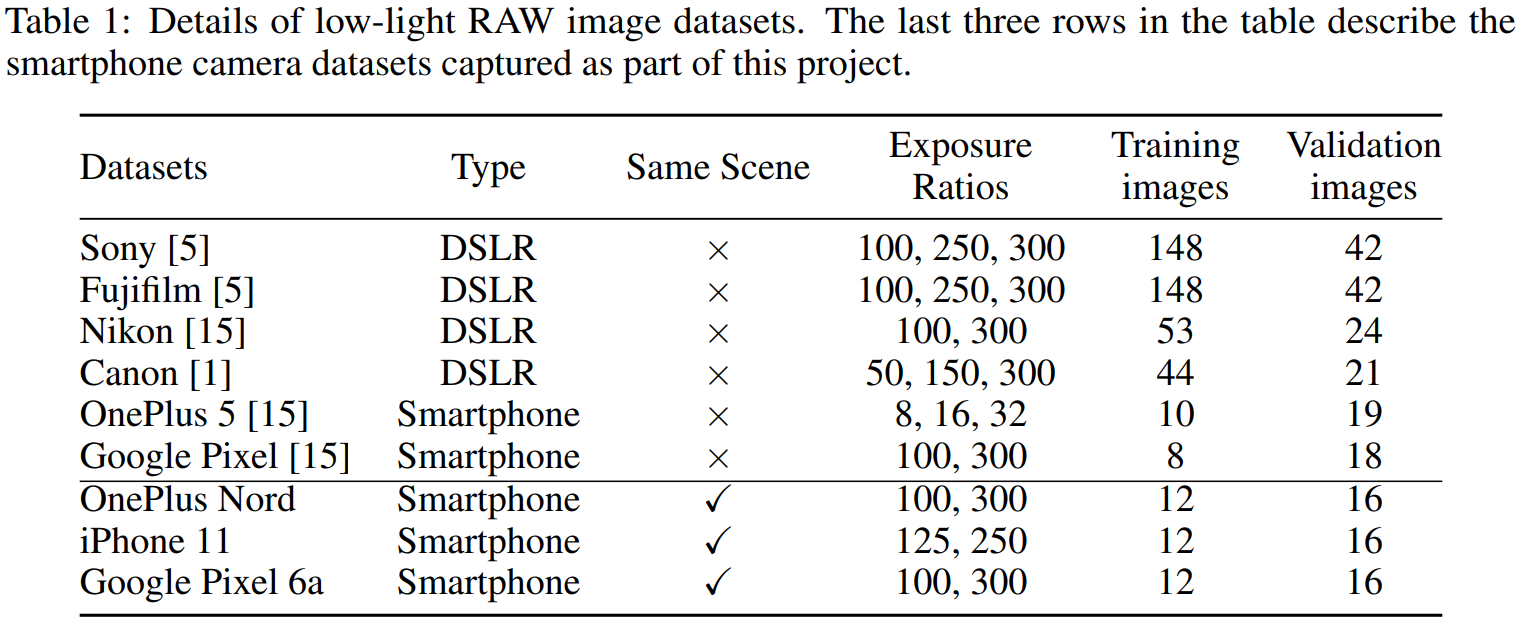

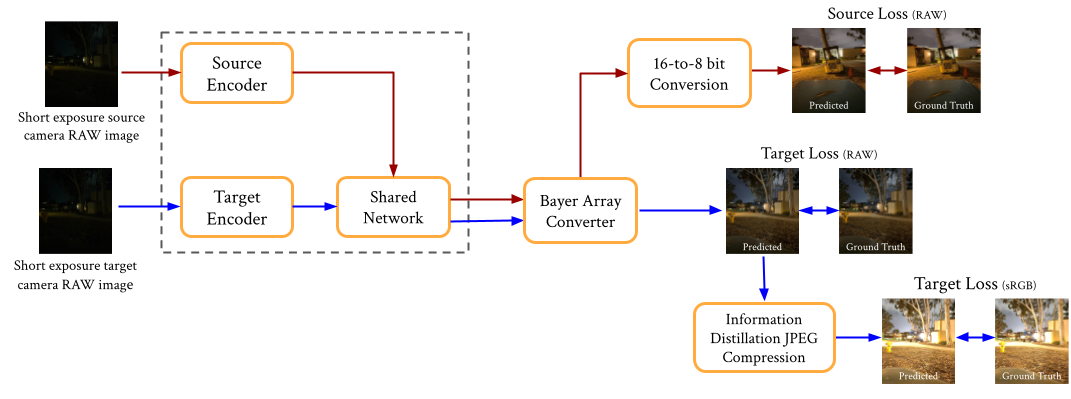

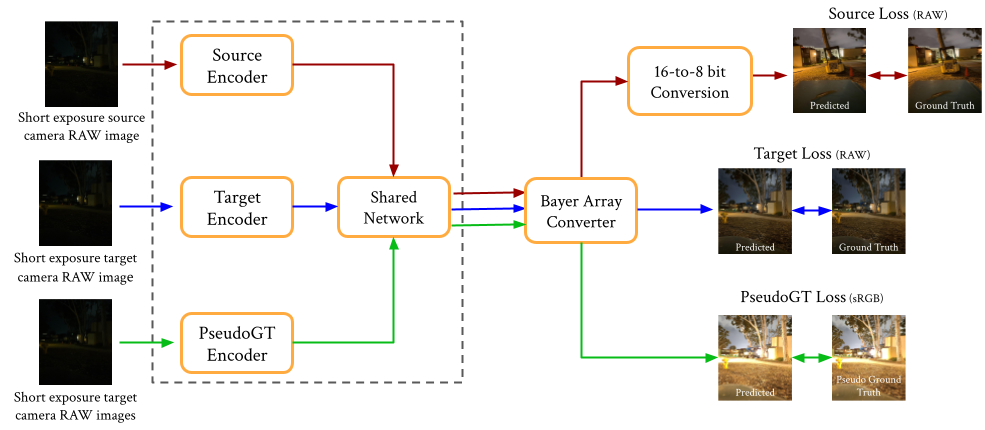

Low-light imaging is a challenging task, especially when images are captured with a smartphone camera, because the short exposure time used during capture leads to severe noise and low illumination. In addition, smartphone camera sensors are low-cost and degrade more under such conditions, and each camera can produce significantly different images of the same scene, which creates a domain gap. Closing this domain gap normally requires expensive large-scale data capture. To address these challenges, we propose to use the linear RAW images from a DSLR camera dataset together with only a handful of RAW images from a smartphone camera to perform domain-adaptive low-light RAW image enhancement. Specifically, we aim to investigate RAW-to-sRGB image enhancement, knowledge distillation, and improved denoising performance. Below, we report our milestone progress so far and our plan for completing the remaining tasks.

Example short-exposure and long-exposure image pairs from the captured smartphone datasets. The short-exposure images are almost entirely dark, whereas the long-exposure images contain scene information.
